Atelier 4: Intensive 2

Ideation

For my project, I decided to explore image synthesis, specifically looking into style transfer.

After exploring Runway's generative models and watching the lectures, I found style transfer particularly interesting and was curious to see how my input images would be morphed. As an art enthusiast, I wanted to look into art styles and examine the underlying processes and networks while creating some art along the way.

Specifically, I wanted to explore the distinction between content and style within style transfer and use it to define, or redefine, meaning within art. From my understanding, what makes this possible is the network's ability to separate representations of style from representations of content.
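One common way to make that separation concrete comes from the original neural style transfer work of Gatys et al.: content is compared through a CNN's feature maps position by position, while style is summarized by a Gram matrix of channel correlations, which throws away where patterns occur. The sketch below is a minimal NumPy illustration under stated assumptions: random arrays stand in for real CNN activations, and the helper names are hypothetical rather than taken from any of the models used here.

```python
import numpy as np

def gram_matrix(features):
    """Style representation: channel-by-channel correlations of a (C, H, W) feature map.
    Spatial positions are summed out, so *where* a pattern appears is discarded."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)

def content_loss(feat_a, feat_b):
    """Content representation: compare feature maps position by position."""
    return float(np.mean((feat_a - feat_b) ** 2))

def style_loss(feat_a, feat_b):
    """Compare Gram matrices instead of raw features."""
    return float(np.mean((gram_matrix(feat_a) - gram_matrix(feat_b)) ** 2))

rng = np.random.default_rng(0)
features = rng.standard_normal((8, 16, 16))  # hypothetical stand-in for CNN activations
# Shuffle spatial positions (same permutation for every channel).
shuffled = features.reshape(8, -1)[:, rng.permutation(256)].reshape(8, 16, 16)

print(content_loss(features, shuffled))  # large: the layout changed
print(style_loss(features, shuffled))    # ~0: the feature statistics did not
```

Shuffling the spatial positions changes the content loss a lot but leaves the style loss essentially at zero, which is the sense in which the two representations can be told apart.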

My idea was to use content that is personal and meaningful to me and morph it into a generated style.

To do this, I planned to make a collage from photographs I had taken. I then wanted to create a “network” by linking images that have a connection to me, emulating a network diagram with dots joined by lines. The dots would represent the generative nature of “style”, while the connecting lines themselves would remain intentional rather than generative.

I find style transfer particularly relevant today in the context of digital art and NFTs, which is why I wanted to investigate that space in this project and understand how style transfer affects art as we know it.

Much of the commentary around style transfer argues that it detracts from the original artist and leads to unoriginality. Although this criticism holds in many respects, the other perspective is that a style must first be created before it can be generated. We are referencing an artist’s original style to create work.

Style transfer can build on these styles to create even more complex, layered artwork.

Process and Reflection

Summary of the chosen work

The generative models I used were the Picasso model, the Dynamic Style Transfer model, the Adaptive Style Transfer model, and the Kandinsky model.

The input for these models was a content image and a style image, and the output was a single generated image combining the two. For these particular models, the style image was built into the model itself, so the user did not have to supply one. Other models, such as AdaIN style transfer, asked the user for both a style image and a content image, and I found their results just as accurate and detailed as those of the models with a built-in style.
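The two-input model relies on adaptive instance normalization (AdaIN), which is what lets it accept an arbitrary style image: each channel of the content features is renormalized to the mean and standard deviation of the corresponding style channel. A minimal NumPy sketch of that single operation follows, assuming (channels, height, width) feature maps that some encoder has already produced; the encoder and decoder of the full model are omitted, and the random arrays are hypothetical stand-ins.

```python
import numpy as np

def adain(content, style, eps=1e-5):
    """Adaptive instance normalization on (C, H, W) feature maps.

    Each channel of `content` is normalized to zero mean and unit std over its
    spatial positions, then rescaled to the mean and std of the matching
    channel in `style`. `eps` guards against division by zero.
    """
    c_mean = content.mean(axis=(1, 2), keepdims=True)
    c_std = content.std(axis=(1, 2), keepdims=True) + eps
    s_mean = style.mean(axis=(1, 2), keepdims=True)
    s_std = style.std(axis=(1, 2), keepdims=True)
    return s_std * (content - c_mean) / c_std + s_mean

rng = np.random.default_rng(1)
content_feats = rng.normal(0.0, 1.0, size=(4, 32, 32))  # stand-in encoder output
style_feats = rng.normal(5.0, 3.0, size=(4, 24, 24))    # style map may differ in size

out = adain(content_feats, style_feats)
print(out.shape)                       # (4, 32, 32): spatial layout of the content
print(out.mean(axis=(1, 2)).round(2))  # per-channel means now match the style's
```

Because only per-channel statistics are borrowed from the style, the output keeps the content's spatial layout, and the style image does not need to match the content image's size.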

The models seemed to be designed as a response to the emphasis on high-speed processing in style transfer research. According to the authors, that line of work is still “lacking from a principled, art historical standpoint” (Sanakoyeu et al. #). They also point out that previous work in this field relied on a direct comparison of art in the domain of RGB images or on CNNs pre-trained on ImageNet. To address this, they propose a style-aware content loss, which is the approach these models use.

Another point in the description was the proposal to evaluate the results qualitatively: the authors had art historians rank image patches to make the evaluation more reliable. I found the example shown below to be an interesting test. The article prompted readers to guess which patches are cut-outs from Monet’s work and which are generated. Even as a huge Monet fan, I did not get it right, but it was interesting to understand the process behind the models.

The paper also describes the encoder-decoder network built around the style-aware content loss. I found that the image below made the concept easy to visualize and understand.
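As I understand the paper, the key idea is that content is compared inside the learned encoder's latent space rather than in RGB or ImageNet feature space: a content image x is encoded, decoded into a stylized image, and encoded again, and the two latent codes should stay close, roughly L_c = (1/d)·||E(x) - E(D(E(x)))||², where E is the encoder, D the decoder, and d the latent dimension (the paper's own notation may differ). The toy sketch below assumes hypothetical linear stand-ins (random matrices) for the paper's deep convolutional encoder and decoder, and leaves out the adversarial loss and the other terms of the full training objective.

```python
import numpy as np

rng = np.random.default_rng(2)
PIXELS, LATENT_DIM = 64, 16  # toy sizes; real images and latent codes are far larger

# Hypothetical linear stand-ins for the paper's convolutional encoder and decoder.
W_enc = rng.standard_normal((LATENT_DIM, PIXELS)) / np.sqrt(PIXELS)
W_dec = rng.standard_normal((PIXELS, LATENT_DIM)) / np.sqrt(LATENT_DIM)

def encode(x):
    """E: flattened image -> latent code."""
    return np.tanh(W_enc @ x)

def decode(z):
    """D: latent code -> flattened "stylized" image."""
    return W_dec @ z

def style_aware_content_loss(x):
    """(1/d) * ||E(x) - E(D(E(x)))||^2: content is compared in latent space."""
    z = encode(x)
    stylized = decode(z)
    return float(np.sum((z - encode(stylized)) ** 2) / LATENT_DIM)

x = rng.standard_normal(PIXELS)  # stand-in content image
print(style_aware_content_loss(x))
```

In the real model this loss is minimized during training, so the encoder learns which details must survive stylization, instead of relying on a fixed network pre-trained on ImageNet.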

Process documentation

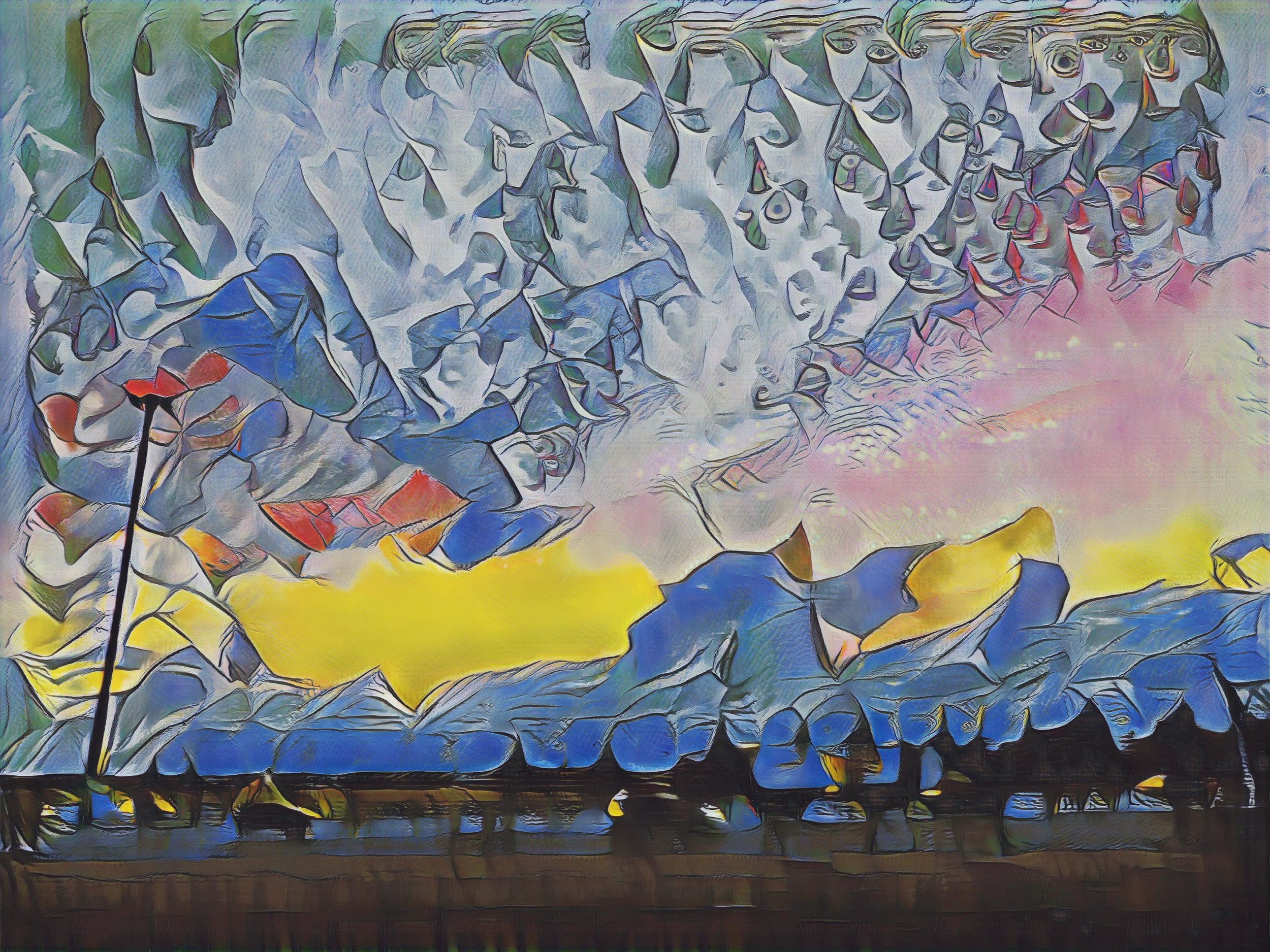

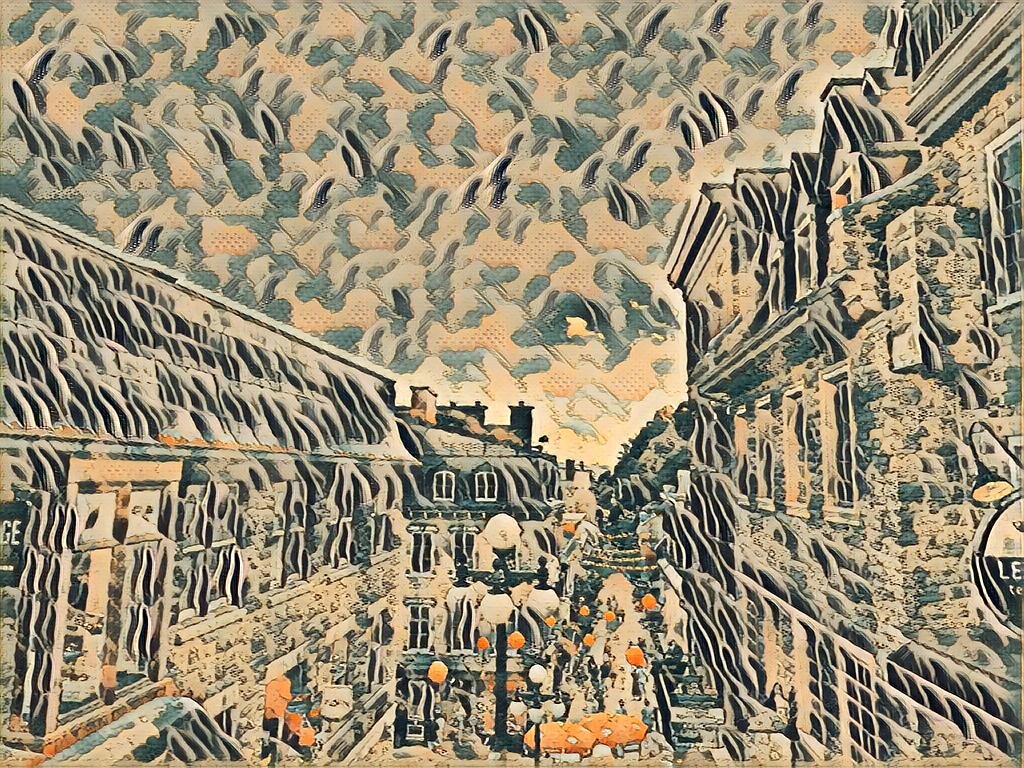

These were some of the images that were generated using the various models:

Picasso model

AdaIN Style Transfer

This was the model in which I input both the style and the content images. It produced one of my favorite pieces, primarily because of the level of detail.

Adaptive Style Transfer

Kandinsky model

Dynamic Style Transfer

Overall, I did not encounter many problems generating images with the models. Some of them took a very long time or did not generate anything at all, and others seemed to work only at particular times. Reading the research papers and understanding the mechanisms behind the models made the generating process more interesting.

Final collage

Reflection

I thoroughly enjoyed this intensive and learned a great deal. Due to time constraints and my limited experience with code, I was not able to explore Google Colab, but I plan to explore it soon. The technical aspect was very interesting, and the ethical and sociological aspects of synthetic media were just as interesting to delve into.

Works Cited

Huang, He, et al. “An Introduction to Image Synthesis with Generative Adversarial Nets.” 2018, p. 17. Accessed 24 February 2022.

Sanakoyeu, Artsiom, et al. “A Style-Aware Content Loss for Real-time HD Style Transfer.” 2018, p. 22. Accessed 27 February 2022.

Shoshan, Alon, et al. “Dynamic-Net: Tuning the Objective Without Re-training for Synthesis Tasks.” 2019, p. 9. Accessed 27 February 2022.